Introduction

Understanding AI detection scores can feel confusing, especially when you’re responsible for publishing content that must meet strict quality, SEO, and compliance standards. Many publishers, agencies, and site owners see a percentage on an Originality.ai report and immediately assume it works like plagiarism detection, but that’s not how AI scores function.

This guide breaks down exactly how to interpret AI scores on your Originality.ai report, what those percentages really mean, and how you can use them to make smart, risk‑based content decisions. It draws on the Originality.ai support perspective and is written to help you evaluate your content with confidence.

If you publish content at scale and want confidence in what you’re putting live, Originality.ai is one of the most reliable AI detection platforms available today. It helps publishers understand AI risk clearly without confusing percentages or false assumptions. 👉 Join now on Originality.ai and take control of your content quality decisions.

What Is the Purpose of AI Scores in Originality.ai?

Originality.ai is designed with a clear mission: to empower publishers to make informed, risk-based decisions about AI-generated content.

Unlike traditional plagiarism checkers that look for copied text, AI detection focuses on probability, not certainty. The goal isn’t to label content as “good” or “bad,” but to help you assess how likely it is that a piece of content was written by AI rather than by a human.

This is especially important for:

- Content publishers

- SEO agencies

- Affiliate site owners

- SaaS companies

- Editorial teams managing multiple writers

AI detection is not about punishment — it’s about informed decision‑making. Before analyzing AI scores, having access to a reliable detection platform is essential. Creating an account allows you to scan content, review AI probability scores, and make informed, risk-based publishing decisions. If you haven’t already, follow our step-by-step guide to Sign Up for an Originality.ai Account and start evaluating your content with clarity and confidence.

Why AI Scores Are Often Misunderstood

From a support and analysis standpoint, one of the most common issues users face is misinterpreting AI percentages.

Many users assume:

- An AI score works like plagiarism detection

- A percentage refers to how much of the content is AI‑generated

- Any non‑zero AI score means the content is “unsafe”

None of these assumptions are accurate.

AI scores are probability indicators, not content breakdowns.

For content publishers, agencies, and SEO teams, relying on guesswork is risky. Originality.ai provides probability-based AI scores that support smarter editorial decisions rather than binary judgments. 👉 Sign up now on Originality.ai and evaluate your content with clarity and confidence.

AI Detection Is Not Binary

Traditional detection systems are usually binary:

- Plagiarized or not

- Copied or original

AI detection does not work this way.

Originality.ai provides a likelihood score, which represents how confident the system is that the content was written by AI or by a human.

This means:

- There is no absolute yes or no

- Scores exist on a spectrum

- Context matters

Understanding this concept is the key to correctly interpreting your report.

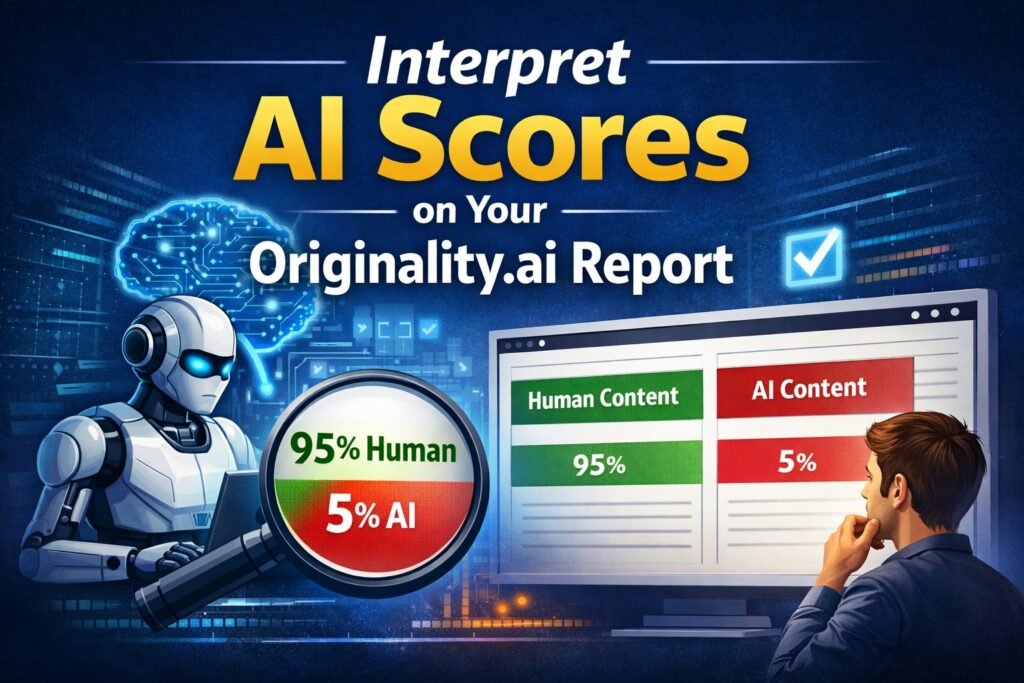

Breaking Down AI vs Human Scores

Every Originality.ai report presents two core values:

- AI score

- Human score

These numbers always add up to 100%.

Example Explained

If your report shows:

- AI score: 5%

- Human score: 95%

This does not mean that 5% of the content was generated by AI.

Instead, it means:

There is a 95% likelihood that the content is human‑written.

This distinction is critical. Unlike traditional detectors, Originality.ai is built for risk-based content evaluation, making it ideal for websites that balance AI assistance with human expertise. It empowers you to decide what’s acceptable for your brand. Get started with Originality.ai today and publish with confidence.
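
To make the arithmetic concrete, here is a minimal Python sketch of how the two values relate. The function name `describe_score` is hypothetical and chosen for illustration only; it is not part of any Originality.ai API.

```python
def describe_score(ai_score_percent: float) -> str:
    """Turn an AI score (0-100) into a plain-language reading.

    Hypothetical helper for illustration; not an Originality.ai function.
    """
    if not 0 <= ai_score_percent <= 100:
        raise ValueError("AI score must be between 0 and 100")
    # The human score is the complement: the two values always add up to 100%.
    human_score_percent = 100 - ai_score_percent
    # Both numbers describe likelihood for the whole piece,
    # not the share of sentences written by AI.
    return (
        f"{human_score_percent:g}% likelihood the content is human-written, "
        f"{ai_score_percent:g}% likelihood it is AI-generated"
    )


print(describe_score(5))
```

The output describes the piece as a whole. Nothing in the calculation splits the text into AI and human portions.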

What an AI Score Does Not Mean

Let’s clear up some of the most common misconceptions.

An AI score does not:

- Indicate how many sentences are AI‑written

- Identify exact AI‑generated sections

- Mean the content violates search engine policies

- Automatically signal low quality

It is simply a statistical confidence indicator.

Why Even Human Content Can Show AI Scores

Many users are surprised when content written by humans still shows a small AI percentage. This is completely normal.

Reasons include:

- Structured writing styles

- Clear, concise language

- Repetitive phrasing

- Topic familiarity

- SEO‑optimized formats

Modern human writing — especially professional or SEO‑driven content — can sometimes resemble AI patterns. That doesn’t mean the content is actually AI‑generated.

Understanding Risk-Based Decision Making

Originality.ai is built around risk tolerance, not rigid thresholds.

This means you decide what level of risk is acceptable based on:

- Your website niche

- Your monetization method

- Your clients or advertisers

- Search engine exposure

- Brand reputation

There is no universal “safe” or “unsafe” number.

Low AI Scores (0%–10%)

Content in this range is very likely human‑written.

Typical use cases:

- Editorial articles

- Expert blog posts

- Thought leadership content

- Manually written SEO pages

For most publishers, this range represents minimal risk.

Moderate AI Scores (10%–30%)

This range often causes confusion, but it is not automatically problematic.

Possible explanations:

- AI‑assisted drafting with heavy human editing

- Use of templates or outlines

- Highly structured informational content

At this level, many publishers:

- Perform manual reviews

- Add personal insights

- Improve tone variation

Risk depends on how strict your publishing standards are.

Higher AI Scores (30%–60%)

This range suggests a stronger likelihood of AI involvement.

Actions to consider:

- Review content originality

- Check for generic phrasing

- Add expert opinions or examples

- Improve sentence diversity

This doesn’t automatically mean content is unusable — but it may require refinement.

Very High AI Scores (60%+)

Scores in this range indicate a high probability of AI‑generated content.

For many publishers, this triggers:

- Rewrites

- Additional human editing

- Content rejection

- Manual author verification

Again, the response depends entirely on your risk tolerance. If you manage multiple writers or AI-assisted workflows, Originality.ai fits seamlessly into editorial review processes. It helps flag content for review without blocking quality writing unnecessarily. Create your account on Originality.ai now and streamline your content review process.
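
The four ranges above can be sketched as a simple lookup. This is only an illustration under stated assumptions: the band names and the `classify_ai_score` function are hypothetical, and because the ranges share their endpoints (10%, 30%, 60%), this sketch assumes a boundary value belongs to the higher band. Adjust the cut-offs to your own risk tolerance.

```python
# Notes per band, taken from the ranges described in this guide.
BAND_NOTES = {
    "low": "very likely human-written; minimal risk for most publishers",
    "moderate": "consider manual review, personal insights, tone variation",
    "higher": "check for generic phrasing; add expert opinions or examples",
    "very high": "consider rewrite, more human editing, or author verification",
}


def classify_ai_score(ai_score_percent: float) -> str:
    """Map an AI score (0-100) to one of the four bands in this guide.

    Assumption: a score exactly on a boundary falls into the higher band.
    """
    if not 0 <= ai_score_percent <= 100:
        raise ValueError("AI score must be between 0 and 100")
    if ai_score_percent < 10:
        return "low"
    if ai_score_percent < 30:
        return "moderate"
    if ai_score_percent < 60:
        return "higher"
    return "very high"


band = classify_ai_score(22)
print(band, "->", BAND_NOTES[band])
```

The cut-offs here are a starting point, not a rule. A team with stricter standards might move the "moderate" threshold lower.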

How Publishers Should Use AI Scores

AI scores are best used as a decision‑support tool, not a judgment system.

Best practices include:

- Comparing scores across writers

- Tracking patterns over time

- Reviewing borderline cases manually

- Combining AI scores with quality checks

AI detection works best when paired with human judgment.
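
As a rough sketch of the first and third practices, the Python below averages scores per writer and pulls out borderline scans for manual review. Everything here is assumed for illustration: the sample data is made up, the function names are hypothetical, and the 10% to 30% "borderline" window is just one possible choice.

```python
from collections import defaultdict
from statistics import mean


def average_score_by_writer(scans):
    """Return the mean AI score per writer from (writer, ai_score) pairs."""
    scores = defaultdict(list)
    for writer, ai_score in scans:
        scores[writer].append(ai_score)
    return {writer: mean(values) for writer, values in scores.items()}


def borderline_scans(scans, low=10, high=30):
    """Return scans whose AI score falls in the assumed borderline window."""
    return [(writer, score) for writer, score in scans if low <= score < high]


# Made-up example data: (writer, AI score in percent).
sample_scans = [
    ("writer_a", 4),
    ("writer_a", 8),
    ("writer_b", 35),
    ("writer_b", 45),
    ("writer_c", 22),
]

print(average_score_by_writer(sample_scans))
print(borderline_scans(sample_scans))
```

Averages like these are only a prompt for a closer look. A writer whose scores drift upward over time may simply have changed format or topic.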

AI Scores and Search Engine Concerns

One major fear among publishers is SEO penalties.

Important clarification:

- Search engines do not use Originality.ai scores

- AI detection tools are internal evaluation systems

- Quality, usefulness, and originality matter more than the writing method

AI scores help you assess risk — they do not represent external enforcement.

Using AI Scores for Editorial Policies

Many teams use Originality.ai to build clear content guidelines, such as:

- Acceptable AI score ranges

- Mandatory human editing steps

- Disclosure requirements

- Review workflows

This creates consistency without banning AI outright.
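
One way to keep such guidelines consistent is to write them down as data. The sketch below is a hypothetical example: the `EditorialPolicy` fields and the `review_steps` function are illustrative names, and the 30% ceiling is an assumed value, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EditorialPolicy:
    """Hypothetical team policy; field names are illustrative only."""
    max_ai_score_percent: float  # acceptable AI score ceiling
    human_edit_required: bool    # mandatory human editing step
    disclosure_required: bool    # whether AI use must be disclosed


def review_steps(policy: EditorialPolicy, ai_score_percent: float) -> list:
    """List the workflow steps a piece must pass under the given policy."""
    steps = []
    if policy.human_edit_required:
        steps.append("human edit")
    if policy.disclosure_required:
        steps.append("add AI disclosure")
    # Scores above the team's ceiling go to manual review, not auto-rejection.
    if ai_score_percent > policy.max_ai_score_percent:
        steps.append("manual review")
    return steps


policy = EditorialPolicy(
    max_ai_score_percent=30,  # assumed ceiling for this example
    human_edit_required=True,
    disclosure_required=False,
)
print(review_steps(policy, 45))
```

Sending high scores to review instead of rejecting them outright keeps the final decision with a human editor.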

AI-Assisted vs AI-Generated Content

Another important distinction is between:

- AI‑generated content (minimal human input)

- AI‑assisted content (human‑led, AI‑supported)

AI scores help you identify where content falls on this spectrum — but context always matters.

If you want more reliable and consistent results, it’s important to understand how writing style, editing depth, and content structure affect AI detection outcomes. Making small improvements can significantly reduce false positives and improve score accuracy. For a deeper breakdown, check out our detailed guide on Improve AI Detection Accuracy in Originality.ai, where we explain practical steps publishers can apply to refine their content evaluation process.

Why There Is No Perfect Score

Language is complex. Writing styles overlap. AI models evolve.

Because of this:

- No AI detector is 100% certain

- Scores are probabilistic by nature

- Interpretation is more important than the number itself

The real value lies in how you use the data.

How We Help You with AI Scores

At Axiabits, we help businesses and content creators make the most of AI-powered tools like Originality.ai. Our services include:

- AI Content Analysis & Interpretation: We guide you in understanding AI scores and reports to ensure your content meets originality standards.

- Plagiarism & AI Detection Solutions: Protect your website and content by detecting AI-generated or copied content efficiently.

- Content Optimization Consulting: Improve your writing and SEO strategy based on AI insights to enhance engagement and performance.

- Training & Workshops: Learn how to use AI content tools like Originality.ai effectively for your team or business.

- Custom Reporting & Recommendations: Receive personalized reports and actionable recommendations to maintain high-quality, original content.

Book now and let’s get started!

Final Thoughts: Interpreting Scores with Confidence

Originality.ai AI scores are not meant to scare or restrict publishers. They exist to provide clarity, transparency, and control.

Remember:

- AI scores show likelihood, not the percentage of the content that is AI-written

- A low AI score does not guarantee human writing

- A higher AI score does not automatically mean failure

- Your risk tolerance defines your action

When understood correctly, Originality.ai becomes a powerful ally in maintaining content integrity while adapting to the evolving role of AI in publishing. As AI-generated content becomes more common, having a reliable detection system is essential for long-term SEO and brand trust. Originality.ai gives you data-driven insights to maintain high editorial standards. 👉 Try Originality.ai today and protect your content strategy.

Disclaimer

This article contains affiliate links, which means that if you click on a link and make a purchase, we may receive a small commission. There’s no additional cost to you, and it helps support our blog so we can continue delivering valuable content. We endorse only products or services we believe will benefit our audience.

Frequently Asked Questions

What does the AI score on an Originality.ai report actually mean?

The AI score represents the likelihood that a piece of content was generated by artificial intelligence. It does not show how much of the content is AI-written. For example, an AI score of 10% means there is a 10% probability the content is AI-generated and a 90% probability it is human-written.

Does a low AI score guarantee that content is human-written?

No. AI detection is probabilistic, not definitive. A low AI score strongly suggests human authorship, but it does not provide a 100% guarantee. Originality.ai is designed to support decision-making, not provide absolute proof.

Is there a “safe” AI score for SEO and publishing?

There is no universal safe or unsafe AI score. Acceptable ranges depend on your risk tolerance, website niche, and editorial standards. Many publishers are comfortable with low to moderate AI scores as long as content quality, originality, and usefulness are high.

Why does human-written content sometimes show an AI score?

Human-written content can show AI scores due to structured writing, SEO optimization, repetitive phrasing, or clear informational formats. These patterns can resemble AI-generated text, even when the content is written entirely by humans.

Should I rewrite content with a moderate AI score?

Not always. Moderate AI scores often indicate AI-assisted content or highly structured writing. Instead of rewriting immediately, many publishers choose to manually review, add personal insights, improve sentence variety, or include expert examples.